There’s a moment many technical teams will recognize even if they never talk about it. It’s late, the lab is quiet, and someone is still at their desk, coaxing a tangle of spreadsheets into a report that customers will trust. The work is good. The science is sound. But the process steals time and confidence. That’s where this story begins: with an industrial chemistry lab whose mandate was simple to say but hard to deliver—prove that a treatment prevents corrosion across complex systems—and a daily reality that made “proof” feel like a minor miracle.

The lab served operators of heavy industrial assets. On each site visit, they collected a cluster of samples from multiple points in the cycle. Back in the lab, instruments took over: metals by ICP, anions by ion chromatography, residuals by UV‑Vis, organics by TOC, and occasional solids characterized by SEM/EDS and XRD. None of that is unusual. What made life difficult was how the work had to be presented. Every sampling event needed to roll up into a single, coherent report, grouped by the customer and the specific unit they operated. Each report had to compare against a known baseline and tell a clear story: what changed, what improved, and where attention was still needed.

For a while, the team made it work with web forms, linked tabs, and a thicket of scripts that did the heavy lifting when everything lined up just right. But growth has a way of turning clever into fragile. New instruments arrived with slightly different file formats. New units added more sampling points. New colleagues joined with their own habits. The lab reached that familiar breaking point where each success felt like a rescue mission. People started to dread “report day,” not because they doubted the results, but because they doubted the system that held them.

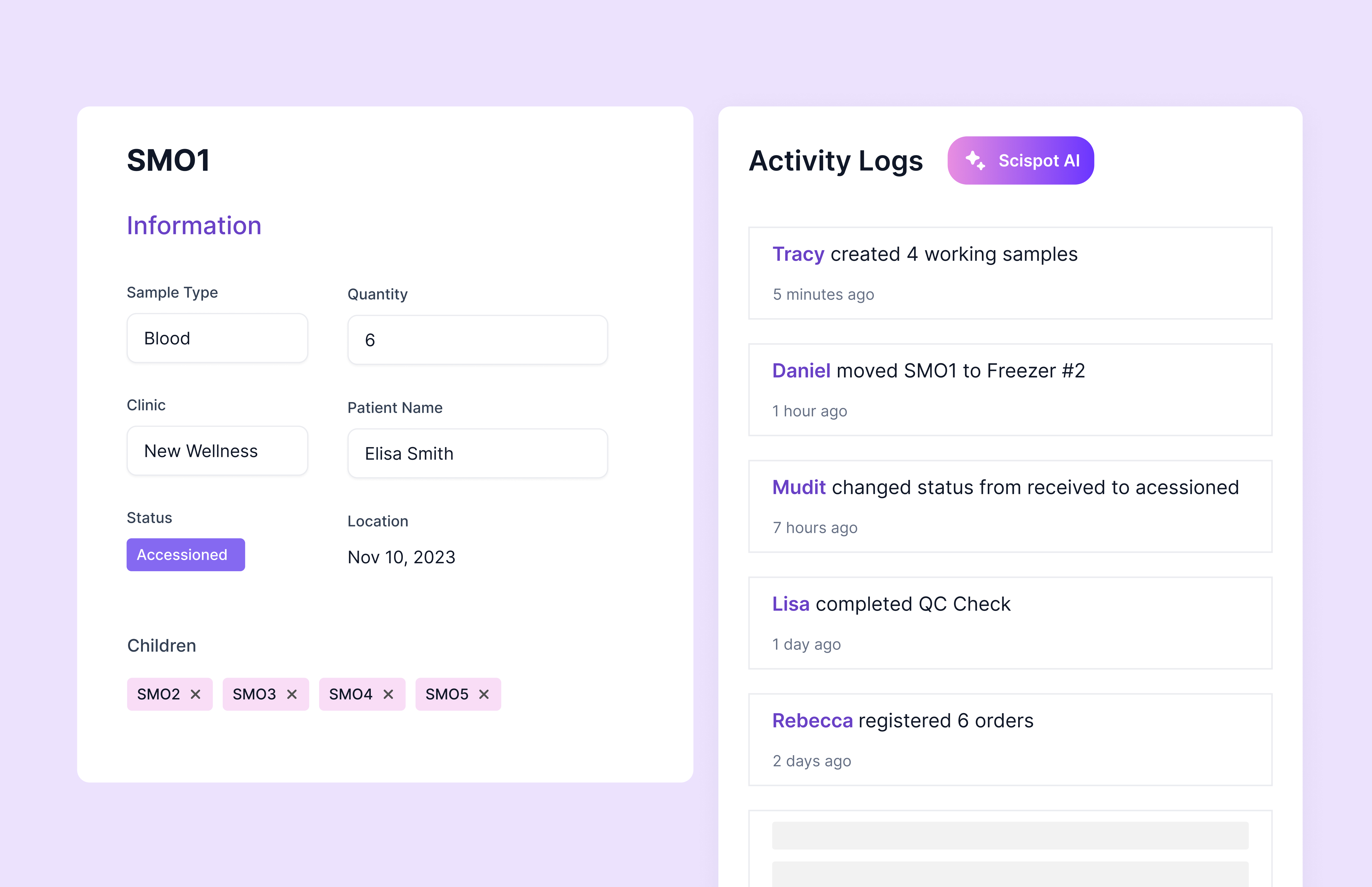

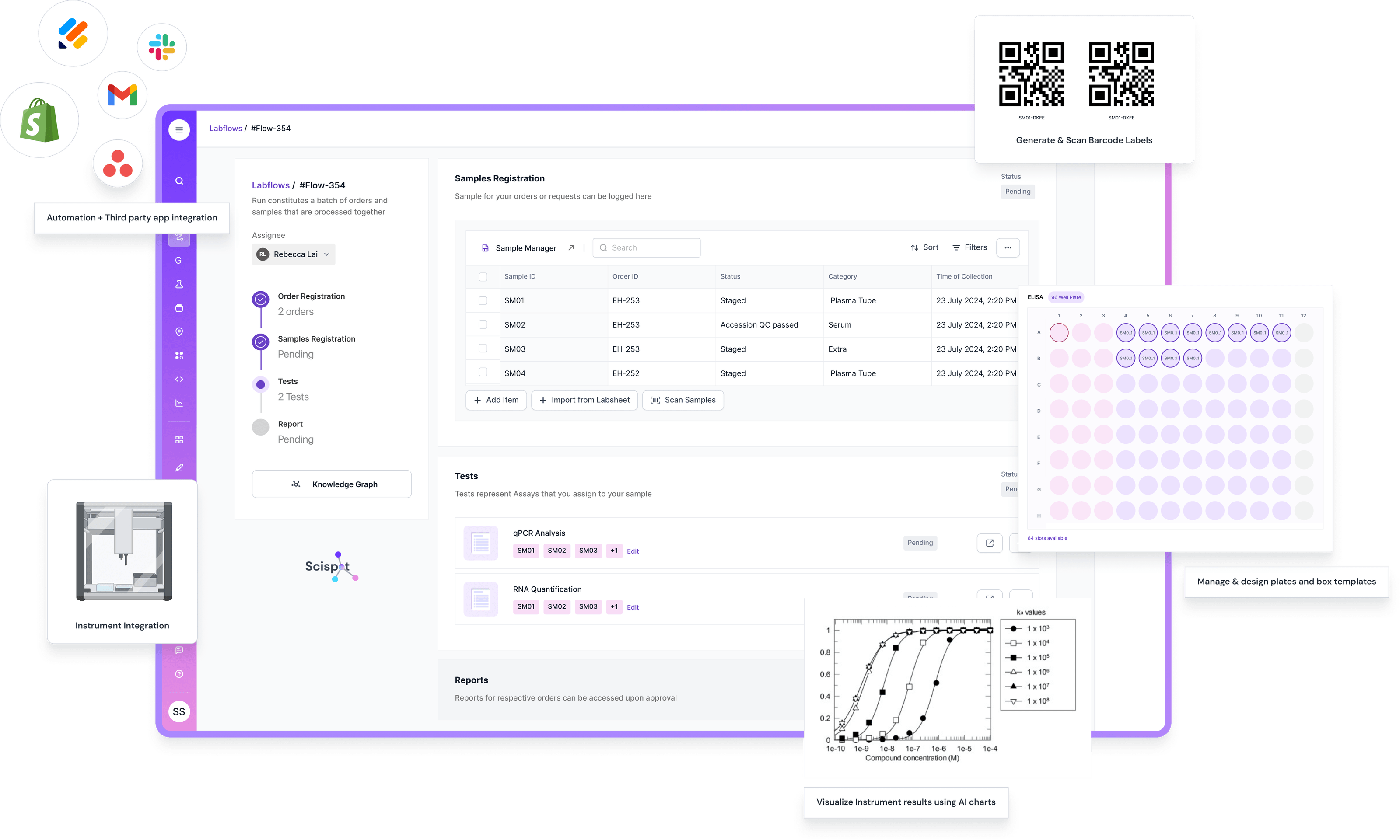

Scispot entered the picture not as a grand replatforming but as a calmer way to reflect the world the team actually lived in. Instead of forcing event‑based work into a scatter of files, Scispot organized everything around the truth of the workflow: a customer with one or more units, a sampling event attached to a moment in time, a set of samples, the analyses that apply, and the results that follow. That hierarchy sounds simple because it is, and that simplicity made all the difference. When the structure matches reality, people stop fighting the system and start relying on it.
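For readers who think in data structures, that hierarchy can be sketched in a few lines of Python. This is a minimal illustration under our own assumptions, not Scispot's actual schema: the class and field names (`Customer`, `Unit`, `SamplingEvent`, `Sample`, `Result`, `sampling_point`) and the `event_results` helper are hypothetical.

```python
from dataclasses import dataclass, field
from datetime import date


@dataclass
class Result:
    analyte: str   # e.g. "Fe" or "Cl-"
    method: str    # e.g. "ICP", "IC", "UV-Vis", "TOC"
    value: float
    unit: str      # e.g. "mg/L"


@dataclass
class Sample:
    sample_id: str
    sampling_point: str  # where in the unit's cycle the sample was drawn
    results: list[Result] = field(default_factory=list)


@dataclass
class SamplingEvent:
    event_date: date     # the moment in time the event is attached to
    samples: list[Sample] = field(default_factory=list)


@dataclass
class Unit:
    name: str
    events: list[SamplingEvent] = field(default_factory=list)


@dataclass
class Customer:
    name: str
    units: list[Unit] = field(default_factory=list)


def event_results(event: SamplingEvent) -> list[tuple[str, Result]]:
    """Flatten one sampling event into (sampling_point, result) pairs."""
    return [(s.sampling_point, r) for s in event.samples for r in s.results]
```

Because every result hangs off a sample, every sample off an event, and every event off a customer's unit, adding a unit or a method means adding a record rather than redesigning a spreadsheet.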

Instrument data, long the source of late‑night stitching, began to flow in without drama. The team continued exporting the vendor files they knew, mostly CSV and text. Scispot recognized them, mapped headers to the right analytes and units, and placed each result with its sample and method. Analysts spent less time translating and more time thinking. The lab didn’t need to “throw out everything that works.” It needed a backbone that respected what already did.
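The header-mapping step is the core of that ingest. The sketch below assumes a wide CSV export with one row per sample and one column per analyte; the column names in `HEADER_MAP`, the `"Sample ID"` column, and the `parse_export` helper are hypothetical placeholders, not Scispot's importer or any vendor's real format.

```python
import csv
import io

# Hypothetical mapping from vendor column headers to (analyte, unit).
# A real lab would maintain one such map per instrument export format.
HEADER_MAP = {
    "Fe 259.940": ("Fe", "mg/L"),
    "Cu 324.754": ("Cu", "mg/L"),
    "Chloride": ("Cl-", "mg/L"),
}


def parse_export(text: str, sample_col: str = "Sample ID") -> list[dict]:
    """Turn a wide vendor CSV into long-format rows, one per sample and analyte."""
    rows = []
    for record in csv.DictReader(io.StringIO(text)):
        sample_id = record[sample_col].strip()
        for header, raw in record.items():
            # Skip the ID column and any instrument columns we have not mapped.
            if header == sample_col or header not in HEADER_MAP:
                continue
            # A blank (or missing) cell means the analyte was not reported.
            if raw is None or raw.strip() == "":
                continue
            analyte, unit = HEADER_MAP[header]
            rows.append({"sample_id": sample_id, "analyte": analyte,
                         "value": float(raw), "unit": unit})
    return rows
```

Long-format rows like these are easy to attach to samples and methods, regardless of how each vendor laid out its columns.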

Quality assurance stopped living in footnotes and screenshots. Baselines were captured once and attached to the right customer and unit. When fresh results arrived, the system calculated deltas and drew trends without anyone babysitting the math. Control checks, certified reference material (CRM) recoveries, duplicates, and acceptance ranges traveled with the data they justified. If something drifted, the notification was quiet and early. Review and approval became a process, not a scavenger hunt for the “final‑final” file.
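The baseline comparison and a QC acceptance check reduce to simple arithmetic. Here is a minimal sketch, assuming results are keyed by analyte name. `compare_to_baseline`, `crm_recovery_flag`, and the default 90-110 % recovery window are illustrative assumptions, not Scispot features or a regulatory limit.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Delta:
    analyte: str
    baseline: float
    current: float

    @property
    def absolute(self) -> float:
        return self.current - self.baseline

    @property
    def percent(self) -> Optional[float]:
        # Percent change is undefined when the baseline is zero.
        if self.baseline == 0:
            return None
        return 100.0 * (self.current - self.baseline) / self.baseline


def compare_to_baseline(baseline: dict[str, float],
                        current: dict[str, float]) -> list[Delta]:
    """Pair each analyte measured in both the baseline and the new event."""
    shared = sorted(baseline.keys() & current.keys())
    return [Delta(a, baseline[a], current[a]) for a in shared]


def crm_recovery_flag(measured: float, certified: float,
                      low: float = 90.0, high: float = 110.0) -> str:
    """Return 'pass' or 'fail' for a CRM recovery against an acceptance range.

    The 90-110 % window is a placeholder; real limits come from the lab's methods.
    """
    recovery = 100.0 * measured / certified
    return "pass" if low <= recovery <= high else "fail"
```

Keeping the flag next to the numbers it qualifies is what lets a reviewer see, in one place, both the change from baseline and whether the run that produced it was in control.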

The part people felt most viscerally was reporting. What used to consume days now finished in hours because the assembly work disappeared. A single, grouped report for the sampling event emerged already organized by location and method, with tables that footed and figures that matched the text. Images from microscopy were in the right place, labeled and linked. Residuals lined up with metals and anions. The comparison to baseline was there, every time, without someone rebuilding it from memory. Analysts could write the actual narrative—the so‑what and the now‑what—instead of wrangling exports into shape.
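Producing a report already organized by location and method amounts to nesting each event's results by sampling point and then by method. A minimal sketch, assuming each result row carries hypothetical `sampling_point` and `method` keys; `group_for_report` is an illustrative helper, not part of Scispot.

```python
from collections import defaultdict


def group_for_report(rows: list[dict]) -> dict[str, dict[str, list[dict]]]:
    """Nest result rows as {sampling_point: {method: [rows...]}} for one event."""
    grouped: dict = defaultdict(lambda: defaultdict(list))
    for row in rows:
        grouped[row["sampling_point"]][row["method"]].append(row)
    # Convert to plain dicts sorted by key so the report order is stable.
    return {point: dict(sorted(methods.items()))
            for point, methods in sorted(grouped.items())}
```

A report template can then walk this structure section by section, so tables and figures always appear in the same order from one event to the next.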

None of this required a months‑long IT odyssey. The lab started by modeling its world inside Scispot as it already operated: customers, units, events, and samples. They introduced instrument imports using the formats their vendors already produced. They encoded the QC rules they lived by into simple checks and status flags. And they designed a report template that looked like them and read like science. The change management was the opposite of heroic. It was patient, realistic, and rooted in the team’s day‑to‑day work.

The emotional shift was real. The lab manager no longer had to act as a human router for five parallel workflows. The senior analyst, who had been the whisperer of arcane spreadsheet logic, reclaimed her evenings and her craft. New hires learned the lab’s way of working not by osmosis but by using a system that made the flow obvious. Customers noticed the calm. They received documents that were consistent across events, comparable with earlier results, and anchored in the QA they expect from a partner rather than a vendor.

Why was Scispot the right call for a lab like this? Because the lab’s job wasn’t to sell tests. It was to prove performance. That difference matters more than it might seem. When your value is measured by the clarity of your grouped, event‑level report—and by the trust customers place in your baselines and trends—you need a platform that treats events as first‑class, welcomes data from many instruments without ceremony, and keeps QC inseparable from the numbers. You also need governance that scales with your growth rather than fighting it: role‑based access where customers see only their data, version history you can stand behind, and a data model that won’t collapse when you add a unit, a method, or a teammate.

Spreadsheets will always have their place. They’re great for exploration and “what if.” But once your operation depends on repeatable, multi-method sampling events and customer-ready narratives, a dedicated backbone stops being a nice-to-have and becomes a guardrail. If your current “system” is a patchwork of files and heroic colleagues, you don’t have a system. You have luck. Scispot replaced luck with a predictable path from field to finding, from instrument output to insight, from raw data to proof.

Today, this anonymized team runs more events with less noise. They add instruments without re‑architecting their week. They onboard customers with confidence because the report they promise is the report they deliver. Their science didn’t change; their confidence did. And confidence is contagious. It shows up in how quickly they answer a customer’s question, how steady they are when something spikes, and how proud they feel when they click “generate” and a complete, coherent story appears.

If you lead or support a lab that validates industrial treatments and you know the quiet dread of gluing data together, consider this an invitation. You already know what you want: event‑centric organization, clean instrument ingest, baselines and trends that explain themselves, QC that lives with the numbers, and a single report that customers can act on. Scispot makes that normal. Not louder. Not flashier. Just better, every day.
